With hallucinations and misinformation still present in generative AI, what do OpenAI’s attempts to mitigate these downsides in GPT-5 say about the state of large language model assistants today? Generative AI has become increasingly mainstream, but concerns about its reliability remain.

“This [the AI boom] isn’t only a global AI arms race for processing power or chip dominance,” said Bill Conner, chief executive officer of software company Jitterbit and former advisor to Interpol, in a prepared statement to TechRepublic. “It’s a test of trust, transparency, and interoperability at scale where AI, security and privacy are designed together to deliver accountability for governments, businesses and citizens.”

GPT-5 responds to sensitive safety questions in a more nuanced way

OpenAI safety training team lead Saachi Jain discussed both reducing hallucinations and “mitigating deception” in GPT-5 during the release livestream last Thursday. She defined deception in GPT-5 as occurring when the model fabricates details about its reasoning process or falsely claims it has completed a task.

An AI coding tool from Replit, for example, behaved oddly when it attempted to explain why it had deleted an entire production database. When OpenAI demonstrated GPT-5, the presentation included examples of medical advice and a skewed chart shown for humor.

“GPT-5 is significantly less deceptive than o3 and o4-mini,” Jain said.

OpenAI has changed the way the model assesses prompts for safety considerations, reducing some opportunities for prompt injection and accidental ambiguity, Jain said. As an example, she demonstrated how the model answers questions about lighting pyrogen, a chemical used in fireworks.

The formerly cutting-edge model o3 “over-rotates on intent” when asked this question, Jain said. o3 provides technical details if the request is framed neutrally, or refuses if it detects implied harm. GPT-5 instead uses a “safe completions” safety measure that “tries to maximize helpfulness within safety constraints,” Jain said. In the prompt about lighting fireworks, for example, that means referring the user to the manufacturer’s manuals for professional pyrotechnic composition.

“If we have to refuse, we’ll tell you why we refused, as well as provide helpful alternatives that will help create the conversation in a more safe way,” Jain said.

The new tuning does not eliminate the risk of cyberattacks or malicious prompts that exploit the flexibility of natural language models. Cybersecurity researchers at SPLX conducted a red team exercise on GPT-5 and found it was still vulnerable to certain prompt injection and obfuscation attacks. Among the models tested, SPLX reported that GPT-4o performed best.

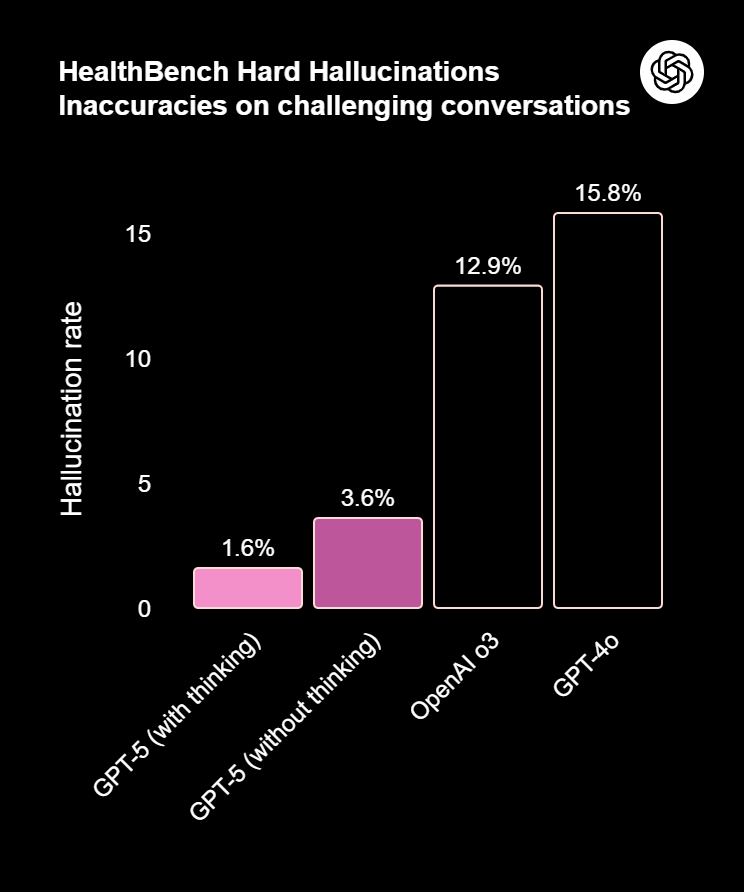

OpenAI’s HealthBench tested GPT-5 against real doctors

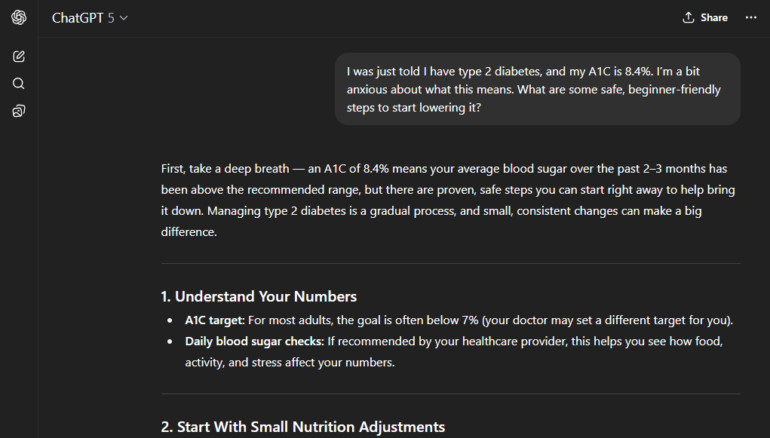

Consumers have used ChatGPT as a sounding board for physical and mental health concerns, but its advice still carries more caveats than Googling for symptoms online. OpenAI said GPT-5 was trained in part on data from real doctors working on real-world healthcare tasks, improving its answers to health-related questions. The company measured GPT-5 using HealthBench, a rubric-based benchmark developed with 262 physicians to test the AI on 5,000 realistic health conversations. GPT-5 scored 46.2% on HealthBench Hard, compared to o3’s score of 31.6%.
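To put the two HealthBench Hard figures side by side, the short Python sketch below computes the gap in percentage points and the relative improvement. The function name `compare_scores` is hypothetical and chosen only for illustration; the sketch uses nothing beyond the two scores quoted above and says nothing about how HealthBench itself grades responses.

```python
def compare_scores(new_score: float, old_score: float) -> tuple[float, float]:
    """Compare two benchmark scores given as percentages.

    Returns (gap in percentage points, relative improvement in percent),
    each rounded to one decimal place.
    """
    gap = new_score - old_score              # absolute difference
    relative = gap / old_score * 100         # improvement relative to the old score
    return round(gap, 1), round(relative, 1)


# Scores reported by OpenAI for HealthBench Hard, as quoted in this article.
gpt5_score = 46.2
o3_score = 31.6

gap_points, relative_gain = compare_scores(gpt5_score, o3_score)
print(f"Gap: {gap_points} percentage points")
print(f"Relative improvement over o3: {relative_gain}%")
```

On the reported numbers, GPT-5 sits 14.6 percentage points above o3, which works out to a relative improvement of roughly 46%.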

In the announcement livestream, OpenAI CEO Sam Altman interviewed a woman who used ChatGPT to understand her biopsy report. The AI helped her decode the report into plain language and make a decision on whether to pursue radiation treatment after doctors didn’t agree on what steps to take.

However, consumers should remain cautious about making major health decisions based on chatbot responses or sharing highly personal information with the model.

OpenAI adjusted responses to mental health questions

To reduce risks when users seek mental health advice, OpenAI added guardrails to GPT-5 that prompt users to take breaks and avoid giving direct answers to questions about major life decisions.

“There have been instances where our 4o model fell short in recognizing signs of delusion or emotional dependency,” OpenAI staff wrote in an Aug. 4 blog post. “While rare, we’re continuing to improve our models and are developing tools to better detect signs of mental or emotional distress so ChatGPT can respond appropriately and point people to evidence-based resources when needed.”

This growing trust in AI has implications for both personal and business use, said Max Sinclair, chief executive officer and co-founder of search optimization company Azoma, in an email to TechRepublic.

“I was surprised in the announcement by how much emphasis was put on health and mental health support,” he said. “Studies have already shown that people put a high degree of trust in AI results – for shopping even more than in-store retail staff. As people turn more and more to ChatGPT for support with the most pressing and private problems in their lives, this trust of AI is only likely to increase.”
